By Kazuhito Matsuda (@kazmaz11)

Local storage in Kubernetes means storage devices or filesystems that are available locally on each node. This article briefly reviews the existing local storage solutions in Kubernetes, then introduces TopoLVM, a new local storage provisioner that features dynamic provisioning and capacity-aware scheduling.

Contents:

- Why we need a new provisioner for local storage

- Related works

- CSI plugin: TopoLVM

- Getting started

- Summary & future works

Why we need a new provisioner for local storage

In Kubernetes, handling persistent data is one of the harder problems, because a Pod can run anywhere while its persistent data lives in a specific location. To free ourselves from this constraint on data location, we can use cloud storage (e.g. S3, EBS, GCS, GPD), on-premise distributed storage systems (e.g. Ceph), and of course traditional NFS.

However, these kinds of storage are often expensive and offer limited performance compared to local storage. Although local storage generally does not provide fault tolerance, it can still be a reasonable choice for software that provides redundancy by itself or does not need redundancy. Examples of such applications include MySQL, Elasticsearch, and, interestingly, distributed storage systems such as Ceph. Existing local storage solutions in Kubernetes are difficult to use properly in production environments, as described later.

Since Kubernetes 1.13, Container Storage Interface (CSI) support has been generally available. A plugin that follows the CSI specification can leverage Kubernetes' storage features, for example:

- Create/delete and attach/detach via PVC/PV

- Topology aware scheduling

- Online resizing, ephemeral storage, and snapshots of volumes

So, we decided to develop TopoLVM, a new CSI plugin for local storage that dynamically provisions volumes using LVM. It already implements dynamic volume provisioning, raw block volumes, and filesystem metrics; online volume resizing and ephemeral volumes are planned.

Related works

Before explaining our CSI plugin, let's take a quick look at the existing ways to use local storage in Kubernetes:

- hostPath

- Local persistent volume

The most popular and simplest way is hostPath. When a manifest specifies a hostPath volume, the container can access the given directory in the host filesystem. However, hostPath is difficult to use properly for stateful applications, because the Pod scheduler does not take into account the node on which a Pod stored its data.
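To make the limitation concrete, here is a minimal sketch of a Pod using a hostPath volume. The Pod name, image, and paths are hypothetical placeholders, not taken from any TopoLVM example.

```yaml
# Hypothetical example: names, image, and paths are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-example
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      # A directory on whichever node happens to run this Pod.
      path: /var/lib/example-data
      type: DirectoryOrCreate
```

Nothing in this manifest ties the Pod to a particular node, so if the Pod is rescheduled elsewhere it sees a different (possibly empty) directory.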

Since Kubernetes 1.14, we can use local persistent volumes. They let the scheduler place a Pod on the node where its PV exists. However, we need to create and delete each PV with a local path manually, which hurts the agility of volume utilization. In other words, we want a dynamic provisioner to ease the lifecycle management of PVs.
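For reference, a manually managed local persistent volume typically looks like the following sketch. The StorageClass name, PV name, path, capacity, and node name are all hypothetical; an administrator would have to write one such PV for every disk on every node.

```yaml
# StorageClass without a provisioner: PVs must be created by hand.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage            # hypothetical name
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-local-pv         # hypothetical name
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/disks/ssd1        # hypothetical path prepared on the node
  # nodeAffinity is what lets the scheduler place Pods on the right node.
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - example-node         # hypothetical node name
```

The `nodeAffinity` section solves the scheduling problem of hostPath, but the fixed `path` and `capacity` show why this approach is static: each volume is carved out and registered in advance.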

CSI plugin: TopoLVM

Architecture

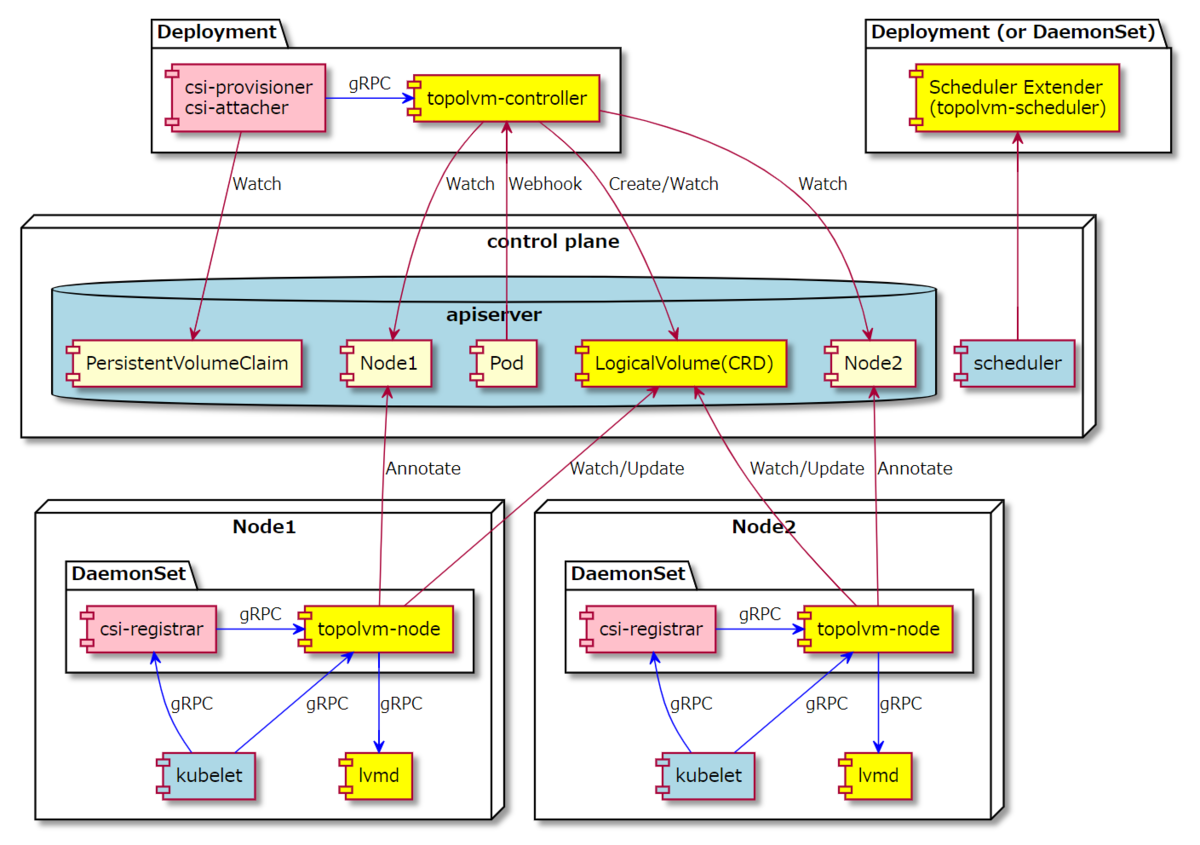

TopoLVM is based on CSI, so its architecture follows the one described in the Kubernetes CSI Developer Documentation.

TopoLVM components are:

- topolvm-controller: CSI controller service.

- topolvm-scheduler: A scheduler extender for TopoLVM.

- topolvm-node: CSI node service.

- lvmd: gRPC service to manage LVM volumes.

You can see the detailed architecture at Design notes.

Features

- Dynamic provisioning: Volumes are created dynamically when PersistentVolumeClaim objects are created.

- Raw block volume: Volumes are available as block devices inside containers.

- Topology: TopoLVM uses the CSI topology feature to schedule a Pod to the Node where its LVM volume exists.

- Extended scheduler: TopoLVM extends the general Pod scheduler to prioritize Nodes having larger storage capacity.

- Volume metrics: Usage stats are exported as Prometheus metrics from kubelet.

Details of each feature can be found in topolvm/docs.

The key to dynamic provisioning: Capacity-aware scheduling

Thanks to LVM, TopoLVM can handle heterogeneous nodes with varying numbers and sizes of storage devices by combining the devices on each node into a single volume group (VG). TopoLVM creates a logical volume (LV) from the VG and passes it to Kubernetes as a PV, which makes dynamic provisioning possible. In addition, TopoLVM can serve both the Filesystem and Block volume modes requested in a PVC.
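As an illustration of the Block volume mode, the following sketch requests a raw block volume and attaches it to a Pod. It assumes the `topolvm-provisioner` StorageClass used later in this article; the PVC name, Pod name, image, and device path are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-block-pvc        # hypothetical name
spec:
  storageClassName: topolvm-provisioner
  volumeMode: Block              # request a raw block device, not a filesystem
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: example-block-pod        # hypothetical name
spec:
  containers:
  - name: app
    image: ubuntu:18.04
    command: ["sleep", "infinity"]
    # Block volumes are attached with volumeDevices instead of volumeMounts.
    volumeDevices:
    - name: data
      devicePath: /dev/xvda      # hypothetical device path inside the container
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: example-block-pvc
```

Omitting `volumeMode` (or setting it to `Filesystem`) and using `volumeMounts` instead gives the filesystem variant of the same request.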

However, dynamic provisioning with local storage requires one more component: capacity-aware scheduling. That is, Pods with PVCs must be scheduled based on the remaining capacity of each node. Otherwise, a Pod could be scheduled to a node without enough capacity and would never start, because its PV could not be created. Naturally, the capacity information is also important for online resizing.

To manage storage capacity through Kubernetes, topolvm-node annotates the Node resource with the available bytes in the VG and updates the annotation whenever that value changes. In addition, when a Pod with a PVC is created, topolvm-controller adds the requested capacity to the Pod spec. topolvm-scheduler then filters out nodes whose available bytes in the VG are less than the requested size and scores the remaining nodes so that those with more available bytes rank higher.
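The following sketch shows one plausible shape of the two pieces of information the scheduler compares. It is an illustration only: the key `example.com/capacity` is a hypothetical stand-in for whatever keys TopoLVM actually uses, and recording the request as an extended resource on the container is an assumption, since the text above says only that the requested capacity is added to the Pod spec.

```yaml
# Node side: free bytes in the VG, published as an annotation.
# The annotation key is hypothetical.
apiVersion: v1
kind: Node
metadata:
  name: example-node
  annotations:
    example.com/capacity: "10737418240"    # 10 GiB currently free in the VG
---
# Pod side: the requested size, added when the Pod is created.
# Representing it as an extended resource is an assumption.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:
        example.com/capacity: "1073741824" # 1 GiB requested through the PVC
      limits:
        example.com/capacity: "1073741824"
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: example-pvc               # hypothetical PVC name
```

With these values, the node passes the filter because 10 GiB of free space exceeds the 1 GiB request; a node advertising less than 1 GiB would be filtered out, and among the remaining nodes the one with the most free bytes would score highest.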

Getting started

Let's follow a quick example to use TopoLVM.

Install TopoLVM

First, create a Kubernetes cluster with kind. The recommended environment is Ubuntu 18.04, and Docker must be installed before you run the following commands.

    $ git clone https://github.com/cybozu-go/topolvm.git
    $ cd topolvm/example
    $ make setup
    $ make run

Then you can confirm that TopoLVM is installed.

    $ export KUBECONFIG=$(kind get kubeconfig-path)
    $ kubectl get storageclass

Next, let's use PVs from a real application.

Elasticsearch with TopoLVM

Elasticsearch can be deployed using Elastic Cloud on Kubernetes (ECK). Although ECK provides a storage plugin that supports dynamic provisioning, that plugin does not support capacity-aware scheduling.

So, let's deploy Elasticsearch with TopoLVM.

    $ kubectl apply -f https://download.elastic.co/downloads/eck/1.0.0-beta1/all-in-one.yaml
    # wait a moment for ECK to come up
    $ kubectl -n elastic-system logs -f statefulset.apps/elastic-operator

Next, create a manifest to deploy Elasticsearch. Note that storageClassName must be set to topolvm-provisioner, the StorageClass you saw in the installation step.

    # elasticsearch.yaml
    apiVersion: elasticsearch.k8s.elastic.co/v1beta1
    kind: Elasticsearch
    metadata:
      name: quickstart
    spec:
      version: 7.4.2
      nodeSets:
      - name: default
        count: 3
        config:
          node.master: true
          node.data: true
          node.ingest: true
          node.store.allow_mmap: false
        volumeClaimTemplates:
        - metadata:
            name: elasticsearch-data
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 1Gi
            storageClassName: topolvm-provisioner

Finally, apply the manifest to your cluster.

    $ kubectl apply -f elasticsearch.yaml

After the Elasticsearch instance has been created, you can confirm that the PVs and their backing LVs were created by TopoLVM.

    $ kubectl get pvc,pv
    NAME                                                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    persistentvolumeclaim/elasticsearch-data-quickstart-es-default-0   Bound    pvc-01c8da19-c7f9-4702-b4bd-f9d027d91953   1Gi        RWO            topolvm-provisioner   20m
    persistentvolumeclaim/elasticsearch-data-quickstart-es-default-1   Bound    pvc-37c45f53-5bfe-4b11-b1c9-498d2507edfb   1Gi        RWO            topolvm-provisioner   20m
    persistentvolumeclaim/elasticsearch-data-quickstart-es-default-2   Bound    pvc-a382b88b-f164-4215-9625-1151868d5791   1Gi        RWO            topolvm-provisioner   20m

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                                STORAGECLASS          REASON   AGE
    persistentvolume/pvc-01c8da19-c7f9-4702-b4bd-f9d027d91953   1Gi        RWO            Delete           Bound    default/elasticsearch-data-quickstart-es-default-0   topolvm-provisioner            20m
    persistentvolume/pvc-37c45f53-5bfe-4b11-b1c9-498d2507edfb   1Gi        RWO            Delete           Bound    default/elasticsearch-data-quickstart-es-default-1   topolvm-provisioner            20m
    persistentvolume/pvc-a382b88b-f164-4215-9625-1151868d5791   1Gi        RWO            Delete           Bound    default/elasticsearch-data-quickstart-es-default-2   topolvm-provisioner            20m

    $ sudo lvscan
      ACTIVE            '/dev/myvg/07fb3a9d-f8a1-4163-841a-ec41677205ae' [1.00 GiB] inherit
      ACTIVE            '/dev/myvg/8b7bd0fd-5564-4d88-97ba-91b704fe9c55' [1.00 GiB] inherit
      ACTIVE            '/dev/myvg/64ba3ca7-93fa-47ea-b9df-4cccb6c65ed4' [1.00 GiB] inherit

Summary & future works

TopoLVM is a capacity-aware dynamic provisioner for local storage based on CSI. If you are interested, please visit github.com/cybozu-go/topolvm.

In the near future, we intend to implement more features that CSI makes possible, including volume expansion and ephemeral volumes.

We will also provide a demo that deploys Rook/Ceph with TopoLVM (we have already sent a PR to support TopoLVM).