By Hirotaka Yamamoto (@ymmt2005)

We are proud to announce a new MySQL operator named MOCO. MOCO manages MySQL clusters on Kubernetes that replicate data using GTID-based semi-synchronous replication.

We will be migrating hundreds of MySQL instances in our production environment to Kubernetes with MOCO.

In this article, we describe the current status of existing MySQL operators and why we had to develop yet another one.

- The status of MySQL operators

- MOCO, our new MySQL operator

- Feature guide

- Designed for MySQL 8 (no support for MySQL 5.7)

- Routing and load-balancing

- Automatic failover

- Manual and automatic switchover

- Detect and quarantine instances having errant transactions

- Replication from an external source

- Mostly compatible with standalone MySQL

- Backup and restore

- Upgrading mysqld

- Metrics

- Custom my.cnf

- Auto configuration for innodb_buffer_pool_size

- Custom Pod template

- Custom Service template

- Sidecar for slow query log

- Auto configuration of PodDisruptionBudget

- Conclusion

The status of MySQL operators

We have evaluated the following MySQL operators.

- mysql/mysql-operator: Oracle's operator

- presslabs/mysql-operator: Presslabs' operator

- percona/percona-xtradb-cluster-operator: Percona's operator

The status shown here is as of June 2021.

Development status

Oracle's operator was re-created recently after two years of inactivity. The new operator is currently in a preview status.

Presslabs' operator is being developed, but the last release was almost a year ago. It does not yet support MySQL 8 and is noted as follows:

The operator is to be considered alpha and not suitable for critical production workloads.

Percona's operator is being developed actively. The last release was a month ago.

Supported MySQL products

Oracle's operator is only for MySQL InnoDB Cluster.

Presslabs' and Percona's operators are only for Percona Server for MySQL, a MySQL compatible product from Percona.

Replication methods

MySQL and its compatible product Percona Server for MySQL have a few replication methods.

- Asynchronous Replication

- Semi-synchronous Replication

- Group Replication (InnoDB Cluster)

- Galera Cluster / XtraDB Cluster

We don't dive into them here, but discuss the key differences and limitations.

With asynchronous replication, you might lose completed transactions when the source instance fails.

With properly configured semi-synchronous replication, completed transactions will not be lost after a failure in the source instance. However, the source instance may result in having errant transactions after recovering from the failure.

Errant transactions are transactions that do not exist in the new source instance, so the old source instance having errant transactions should not re-join the cluster.

Group replication prevents errant transactions by adopting Paxos-based consensus protocol. But it has a number of limitations.

We did not investigate Galera Cluster and XtraDB Cluster in detail because we found out early that they could not meet our requirements.

Oracle's operator adopts group replication.

Presslabs' operator uses asynchronous replication. There is an ongoing issue that adds support for semi-synchronous replication, though.

Percona's operator adopts XtraDB Cluster.

Why we developed MOCO

Many of our products have been relying on a single-node MySQL, so it is not easy to migrate their databases if the new database has a lot of incompatibilities compared to a single-node MySQL.

Specifically, the product development teams requested that the new database must satisfy the following conditions.

- Support MySQL 8 features

- Do not lose completed transactions after a failover

- Allow large transactions over 2 GiB

- Allow setting

innodb_autoinc_lock_modeto 1 - Support all 4 transaction isolation levels including

SERIALIZABLE

To go straight to the bottom line, existing MySQL operators do not satisfy these requirements.

InnoDB Cluster, the replication method of Oracle's operator, has a number of limitations. Among others, we could not accept the limitation to the maximum transaction size.

Presslabs' operator does not support MySQL 8 features and may lose completed transactions.

Percona's operator does not allow transactions over 2 GiB and setting innodb_autoinc_lock_mode to 1.

It's support for SERIALIZABLE isolation level remains experimental.

We had no way to satisfy our requirements except for developing a new operator ourselves.

MOCO, our new MySQL operator

So we have designed and developed MOCO, and released it under an open source license.

MOCO can manage any number of MySQL clusters consisting of 1, 3, or 5 mysqld instances.

Only one instance in each cluster is writable and called the primary instance.

The other instances are read-only and called replica instances.

Instances replicate data in real time using GTID-based loss-less semi-synchronous replication.

With a proper configuration, this replication method can prevent a loss of completed transactions after a crash of the primary instance. A drawback of this method is that the failed instance would have transactions that do not exist in other instances (= errant transactions) after recovering from the crash.

Features

MOCO provides a myriad of features to help you manage MySQL clusters, as shown below. We will later describe each of them in-depth.

- MySQL features

- Designed for MySQL 8

- Support all transaction levels including

SERIALIZABLE - Allow large transactions > 2 GiB

- Allow setting

innodb_autoinc_lock_modeto 1

- Clustering

- Single writable primary + multiple read-only replicas

- The number of instances in a cluster can be increased online

- Fast (re-)creation of replicas thanks to Clone Plugin

- Prevent a loss of completed transactions with loss-less semi-synchronization

- Asynchronous replication from an external MySQL

- Detect and quarantine instances having errant transactions

- Automatic failover when the primary instance goes down

- Manual switchover to change the primary instance to a replica

- Automatic switchover when the Pod of the primary instance is being deleted

- Load-balancing

- Backup and restore

- Fast backup using MySQL shell

- Recurrent and on-demand backup

- Point-in-Time Recovery (PiTR)

- Configurations

- Custom MySQL container image

- Custom

my.cnf - Auto tuning

innodb_buffer_pool_size - Custom Pod specification

- Custom Service specification

- Miscellaneous

- Safe online upgrading of MySQL

- Various metrics including those from

mysqld_exporter - Streaming slow query log as a sidecar container log

- PodDisruptionBudget is configured automatically

Lastly, the architecture of MOCO can handle 1,000+ clusters with a single controller.

Usage

Installing MOCO is straightforward. The following is an excerpt of the official manual to install cert-manager and MOCO.

$ curl -fsL https://github.com/jetstack/cert-manager/releases/latest/download/cert-manager.yaml | kubectl apply -f - $ curl -fsL https://github.com/cybozu-go/moco/releases/latest/download/moco.yaml | kubectl apply -f -

To create a cluster of MySQL servers, create MySQLCluster resource as follows. Please read the linked document for the detailed description of each field.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: # The number of instances in a cluster. Choose 1, 3, or 5. # You can increase the number afterwards. replicas: 3 podTemplate: spec: containers: # Define a container named "mysqld". # You may include other containers or field settings. - name: mysqld # Image for "mysqld" should include a helper program called `moco-init`. # Pre-built images are available on https://quay.io/repository/cybozu/mysql?tag=latest&tab=tags # You can also build your own image. See https://cybozu-go.github.io/moco/custom-mysqld.html image: quay.io/cybozu/mysql:8.0.25 # The above image is run as UID=10000 GID=10000. # If the volume created from the below volumeClaimTemplates does not allow writes to this UID/GID, # set `securityContext.fsGroup` to 10000 as follows. securityContext: fsGroup: 10000 volumeClaimTemplates: # Define a volume named "mysql-data". - metadata: name: mysql-data spec: accessModes: [ "ReadWriteOnce" ] resources: requests: storage: 100Gi

To watch how MOCO constructs a cluster, use kubectl get -w mysqlcluster.

The cluster is ready for use when HEALTHY becomes True.

$ kubectl get -w mysqlcluster test NAME AVAILABLE HEALTHY PRIMARY SYNCED REPLICAS ERRANT REPLICAS LAST BACKUP test 0 <no value> test False False 0 <no value> test False False 0 <no value> test False False 0 1 <no value> test True False 0 2 <no value> test True True 0 3 <no value>

To access the constructed MySQL cluster, use a kubectl plugin named kubectl-moco.

You can download a pre-built binary of kubectl-moco from https://github.com/cybozu-go/moco/releases/ .

Following is an example to install and set up kubectl-moco on a Linux machine.

$ mkdir -p $HOME/go/bin $ PATH=$HOME/go/bin:$PATH $ curl -fsL -o $HOME/go/bin/kubectl-moco https://github.com/cybozu-go/moco/releases/latest/download/kubectl-moco-linux-amd64 $ chmod a+x $HOME/go/bin/kubectl-moco

kubectl-moco has three sub commands: mysql, credential, and switchover.

For details, please consult the official manual.

Let's see how we can use kubectl-moco to access the primary instance and write some data.

With -it option, it executes mysql command-line tool interactively.

Since autocommit is set to 0, COMMIT is necessary to persist the written data.

$ kubectl moco -n default mysql -it -u moco-writable test

mysql> CREATE DATABASE foo;

Query OK, 1 row affected (0.00 sec)

mysql> USE foo;

Database changed

mysql> CREATE TABLE t (i INT PRIMARY KEY AUTO_INCREMENT, data TEXT NOT NULL);

Query OK, 0 rows affected (0.03 sec)

mysql> INSERT INTO t (data) VALUES ('aaa'), ('bbb');

Query OK, 2 rows affected (0.00 sec)

Records: 2 Duplicates: 0 Warnings: 0

mysql> COMMIT;

Query OK, 0 rows affected (0.00 sec)

mysql> quit

Bye

The next example is to read data from a replica instance.

$ kubectl moco -n default mysql --index 1 test -- -D foo -t -e 'SELECT * FROM t' +---+------+ | i | data | +---+------+ | 1 | aaa | | 2 | bbb | +---+------+

As you see, the data have been replicated correctly.

MOCO prepares MySQL users such as moco-readonly, moco-writable, moco-admin.

It is recommended that you create a separate user and grant minimal privilege to the user for your application.

$ kubectl moco -n default mysql -it -u moco-writable test mysql> CREATE USER 'foo'@'%' IDENTIFIED BY 'xxx'; Query OK, 0 rows affected (0.00 sec) mysql> GRANT ALL ON foo.* TO 'foo'@'%'; Query OK, 0 rows affected (0.00 sec)

Applications running inside a Kubernetes cluster can access MySQL thorough Services. The following is a list of Services for a MySQLCluster.

$ kubectl get svc -lapp.kubernetes.io/created-by=moco NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE moco-test ClusterIP None <none> 3306/TCP,33060/TCP 40m moco-test-primary ClusterIP 10.96.102.109 <none> 3306/TCP,33060/TCP 40m moco-test-replica ClusterIP 10.96.59.78 <none> 3306/TCP,33060/TCP 40m

To access the primary instance, use Service named *-primary.

The primary instance allows both reads and writes.

The port number 3306 is for the traditional MySQL protocol, and 33060 is for the X protocol.

To read from replica instances, use Service named *-replica.

The destination of this Service is one of the replica instances that is not delayed too much from the primary.

Structure of a MySQL cluster

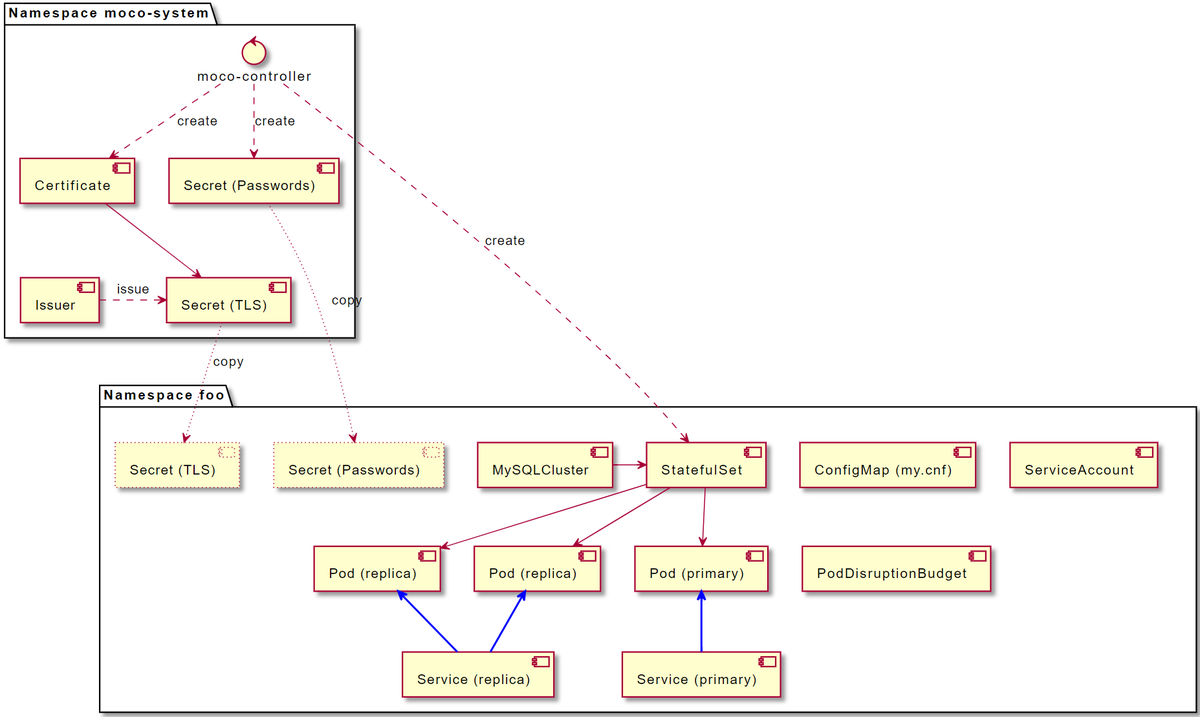

The following figure shows resources created by a component of MOCO (moco-controller) for a MySQLCluster.

At the heart of a cluster is a StatefulSet.

From the StatefulSet, Pods are created as many as specified in spec.replicas field of MySQLCluster.

Each Pod has the following sidecar containers in addition to mysqld container.

moco-agent: executesCLONE INSTANCE, check replication lag, and so on.fluent-bit: copies the contents of the slow query log file to stdout.mysqld_exporter: exports variousmysqldmetrics in Prometheus format.

moco-controller communicates with moco-agent using gRPC to request execution of CLONE INSTANCE.

This communication is protected with mTLS (mutual TLS authentication) to keep things secure.

moco-controller creates a Certificate resource in its namespace and asks cert-manager to issue TLS certificates for mTLS.

The server certificate will be copied to the namespace of MySQLCluster.

moco-controller also generates random passwords for system users like moco-admin.

It stores the generated passwords in a Secret in its namespace, and copies it to the namespace of MySQLCluster.

Feature guide

Let's take a deeper look at each of the features.

Designed for MySQL 8 (no support for MySQL 5.7)

All our MySQL instances are MySQL 8, therefore MOCO is designed solely for MySQL 8.

This greatly simplified things and allowed us to use a number of advanced functions of MySQL 8 including:

Atomic DDL

Starting with MySQL 8, data dictionary is changed to InnoDB so that DDL like

CREATE TABLEbecomes an atomic operation.mysql_upgradeis no longer necessaryStarting with MySQL 8.0.16, running

mysql_upgradeis not required aftermysqldis upgraded.-

As it reads, this is a new type of lock to block only DDL during a backup. If a backup needs to be taken from the primary instance, this would reduce impacts on applications.

Routing and load-balancing

A MySQL cluster created by MOCO has only one writable instance called the primary. It would be a hassle if applications had to switch the instance it connects every time the primary instance is changed.

MySQL Router provides routing and load-balancing to MySQL instances to resolve this problem. But we don't use it because Kubernetes provides generic routing and load-balancing function called Service.

Specifically, MOCO creates the following Services for each MySQLCluster.

moco-NAME-primaryroutes traffic to the primary instance.moco-NAME-replica: routes traffic to one of non-lagging replica instances.

The maximum allowed replication lag can be configured with spec.maxDelaySeconds field in MySQLCluster.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: maxDelaySeconds: 180 ...

Automatic failover

A failover is to switch the primary instance to a live replica when the primary instance is down.

MOCO detects the following incidents on the primary instance and conducts a failover automatically.

- Connection failures

- Data lost

It is worth mentioning that MOCO can do a failover without fencing off the Node running the primary instance.

In general, even if an observer (moco-controller) cannot access the primary instance, applications may still be able to access it.

This happens, for example, when the network is partitioned.

In such a situation, if a replica gets promoted as a new primary and becomes writable, applications may see two writable instances and write different data to each of them. This is an incident known as split-brain.

Fencing is a method of preventing split-brains by, for example, powering down the Node server.

MOCO can safely conduct a failover without fencing thanks to semi-synchronous replication. For details, read the design document about it.

Manual and automatic switchover

A switchover is to switch the primary instance to a replica gracefully while the current primary is alive.

You can switch the primary instance manually by executing kubectl moco switchover sub command.

The following is an example to switch the primary from instance 0 to instance 1.

$ kubectl get mysqlclusters test NAME AVAILABLE HEALTHY PRIMARY SYNCED REPLICAS ERRANT REPLICAS LAST BACKUP test True True 0 3 2021-05-31T19:37:58Z $ kubectl moco switchover test $ kubectl get mysqlclusters test NAME AVAILABLE HEALTHY PRIMARY SYNCED REPLICAS ERRANT REPLICAS LAST BACKUP test True True 1 3 2021-05-31T19:37:58Z

MOCO automatically switches the primary when the Pod of the primary instance is about to be deleted.

This happens, for example, when the Pod template of MySQLCluster is modified. MOCO updates the Pod template of StatefulSet, then all Pods in the StatefulSet will be re-created one by one. Automatic switchover minimizes the downtime for applications in this case.

We encourage you to test this feature and measure the downtime in a real application.

Detect and quarantine instances having errant transactions

Errant transactions are transactions that exist only on replicas and do not exist on the primary. Under ordinal circumstances, errant transactions would not get into replicas that are read-only, but it can happen due to bugs or human intervention.

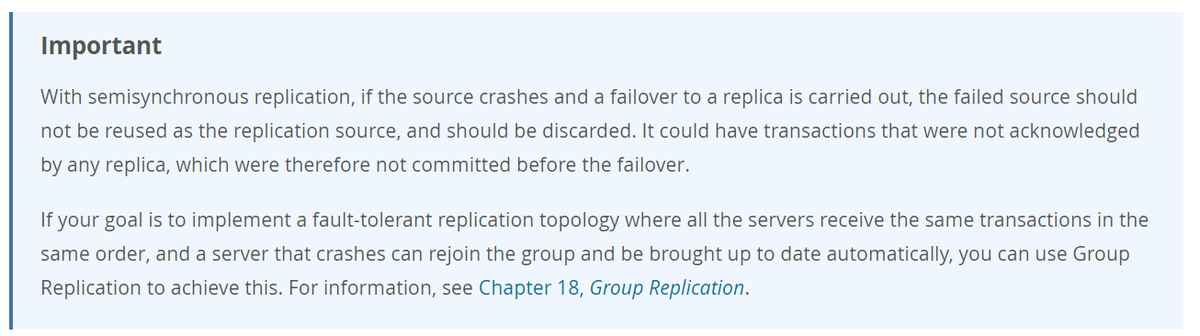

Errant transactions can also be generated as a result of failover and crash recovery in a semi-synchronous replication cluster. For this reason, the official manual of MySQL warns that the failed primary instance should be discarded with its data as follows:

MOCO does not discard the primary instance after a failover for safety reasons. Instead, it detects and quarantines instances having errant transactions. Users are responsible for discarding and re-creating such instances.

Replication from an external source

You can create a MySQLCluster that replicates data from an existing external MySQL.

The replication from an external source is asynchronous, so it can be used to replicate data between distant data centers for disaster recovery.

For details, read the user manual.

Mostly compatible with standalone MySQL

MOCO's cluster can meet the following requirements that are not supported by InnoDB Cluster or Galera Cluster.

SERIALIZABLEtransaction isolation level- Large transactions over 2 GiB

- Setting

innodb_autoinc_lock_modeto 1

Backup and restore

A MySQLCluster can be configured to take backups on a regular basis.

First, create a BackupPolicy resource as shown below. To store backup data in a S3-compatible object storage, it allows you to specify ServiceAccount to run the backup Pod or to set environment variables to pass necessary credentials.

apiVersion: moco.cybozu.com/v1beta1 kind: BackupPolicy metadata: namespace: default name: daily spec: # schedule can be specified in CRON format. schedule: "@daily" jobConfig: # Specify a ServiceAccount name that is privileged to access the object storage bucket. # If ServiceAccount is not used for the authentication, use "default". serviceAccountName: default # This is an example to access MinIO bucket env: - name: AWS_ACCESS_KEY_ID value: minioadmin - name: AWS_SECRET_ACCESS_KEY value: minioadmin # Object storage bucket information. # bucketName is required. Others are optional. bucketConfig: bucketName: moco region: us-east-1 endpointURL: http://minio.default.svc:9000 usePathStyle: true # local ephemeral volume for the working directory. # It is recommended to use a generic ephemeral volume if MySQL has large data. # https://kubernetes.io/docs/concepts/storage/ephemeral-volumes/#generic-ephemeral-volumes workVolume: emptyDir: {}

Then set spec.backupPolicyName field of MySQLCluster as follows:

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: # Name of BackupPolicy in the same namespace. backupPolicyName: daily ...

After that, MOCO creates a CronJob like this:

$ kubectl get cronjobs NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE moco-backup-test @daily False 0 <none> 11s

You can take a backup immediately with kubectl create job.

Note that if there is no data in MySQL, the backup will fail.

$ kubectl create job --from=cronjob/moco-backup-test backup-now job.batch/backup-now created $ kubectl get jobs backup-now NAME COMPLETIONS DURATION AGE backup-now 1/1 2s 5s

MySQLCluster keeps the information of the last successful backup in status.backup.

MOCO also provides metrics of backups in Prometheus format.

$ kubectl get mysqlclusters test

NAME AVAILABLE HEALTHY PRIMARY SYNCED REPLICAS ERRANT REPLICAS LAST BACKUP

test True True 0 3 2021-05-31T19:20:24Z

$ kubectl get mysqlclusters test -o json | jq .status.backup

{

"binlogFilename": "binlog.000001",

"binlogSize": 0,

"dumpSize": 20480,

"elapsed": "144.786401ms",

"gtidSet": "d7f0a656-c243-11eb-8f30-a2a44bcdb3f8:1-3",

"sourceIndex": 1,

"sourceUUID": "d6b23081-c243-11eb-8609-aecb58bb22b1",

"time": "2021-05-31T19:20:24Z",

"warnings": null,

"workDirUsage": 7886

}

MOCO uses Instance Dump Utility in MySQL Shell to take backups.

This method uses LOCK INSTANCE FOR BACKUP that allows DML while the backup is taking place.

It is also significantly faster than mysqldump or mysqlpump.

Binary log files will be stored for the second and subsequent backups. The binary log files are used for Point-in-Time Recovery.

$ kubectl create job --from=cronjob/moco-backup-test backup-now2

job.batch/backup-now2 created

$ kubectl get jobs backup-now2

NAME COMPLETIONS DURATION AGE

backup-now2 1/1 2s 2s

$ kubectl get mysqlclusters test -o json | jq .status.backup

{

"binlogFilename": "binlog.000001",

"binlogSize": 662,

"dumpSize": 20480,

"elapsed": "197.541044ms",

"gtidSet": "d7f0a656-c243-11eb-8f30-a2a44bcdb3f8:1-5",

"sourceIndex": 1,

"sourceUUID": "d6b23081-c243-11eb-8609-aecb58bb22b1",

"time": "2021-05-31T19:37:58Z",

"warnings": null,

"workDirUsage": 7879

}

To restore data from a backup, create a new MySQLCluster with spec.restore field as follows:

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: restore spec: restore: # The namespace and name of the MySQLCluster to be restored. # They are used to locate backup data in the object storage bucket. # The resource itself does not have to exist. sourceNamespace: default sourceName: test # Specify the point-in-time in RFC3339 format to restore data. restorePoint: "2021-05-31T19:37:20Z" # jobConfig is the same as BackupPolicy's. jobConfig: serviceAccountName: default env: - name: AWS_ACCESS_KEY_ID value: minioadmin - name: AWS_SECRET_ACCESS_KEY value: minioadmin bucketConfig: bucketName: moco region: us-east-1 endpointURL: http://minio.default.svc:9000 usePathStyle: true workVolume: emptyDir: {} ...

A Job will be created to restore data from backup. Once completed, the Job can be deleted anytime.

$ kubectl get jobs moco-restore-restore NAME COMPLETIONS DURATION AGE moco-restore-restore 1/1 14s 25s $ kubectl get mysqlclusters restore NAME AVAILABLE HEALTHY PRIMARY SYNCED REPLICAS ERRANT REPLICAS LAST BACKUP restore True True 0 1 <no value> $ kubectl moco mysql restore -- -D foo -t -e 'SELECT * FROM t' +---+------+ | i | data | +---+------+ | 1 | aaa | | 2 | bbb | +---+------+

You can and should check the details of restoration through the Pod log of the restore Job.

$ kubectl logs moco-restore-restore-vj2ql

{"level":"info","ts":1622490569.9764667,"msg":"waiting for a pod to become ready","name":"moco-restore-0"}

{"level":"info","ts":1622490571.98407,"msg":"waiting for the mysqld to become ready","name":"moco-restore-0"}

{"level":"info","ts":1622490581.0054455,"msg":"restoring from a backup","dump":"moco/default/test/20210531-192024/dump.tar","binlog":"moco/default/test/20210531-192024/binlog.tar.zst"}

Loading DDL, Data and Users from '/work/dump' using 4 threads.

Opening dump...

Target is MySQL 8.0.25. Dump was produced from MySQL 8.0.25

Checking for pre-existing objects...

Executing common preamble SQL

Executing DDL script for schema `foo`

[Worker000] Executing DDL script for `foo`.`t`

Analyzing table `foo`.`t`

[Worker001] foo@t@@0.tsv.zst: Records: 1 Deleted: 0 Skipped: 0 Warnings: 0

Executing user accounts SQL...

Executing common postamble SQL

Resetting GTID_PURGED to dumped gtid set

1 chunks (1 rows, 6 bytes) for 1 tables in 1 schemas were loaded in 1 sec (avg throughput 6.00 B/s)

0 warnings were reported during the load.

{"level":"info","ts":1622490581.1993299,"msg":"loaded dump successfully"}

{"level":"info","ts":1622490581.231039,"msg":"applied binlog successfully"}

{"level":"info","ts":1622490581.2483056,"msg":"restoration finished successfully"}

Upgrading mysqld

It is just as simple as replacing mysqld container image to upgrade mysqld of a MySQLCluster.

MOCO and Kubernetes will upgrade instances one by one safely with automatic switchover. It is recommended to take a backup before upgrading your cluster, though.

MOCO does not support downgrading because MySQL itself does not support downgrading.

Metrics

MOCO exports various metrics about the clustering status and the backup status of MySQLCluster.

Moreover, MOCO embeds mysqld_exporter as a sidecar of mysqld Pod to collect and export metrics of mysqld about InnoDB, performance_schema, and so on.

To enable mysqld_exporter, specify collecotrs in spec.collectors field of MySQLCluster as follows:

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: # List of collector flags w/o `collect.` prefix. # For details, see https://github.com/prometheus/mysqld_exporter/blob/master/README.md#collector-flags collectors: - engine_innodb_status - info_schema.innodb_metrics ...

Read the user manual about how to scrape the metrics and about their details.

Custom my.cnf

To change or set configuration variables for mysqld, create a ConfigMap and specify them with key-value data.

The following is an example to set long_query_time and innodb_log_file_size.

apiVersion: v1 kind: ConfigMap metadata: namespace: default name: mycnf data: long_query_time: "0" innodb_log_file_size: "10M"

Then specify the name of the ConfigMap in spec.mysqlConfigMapName field of MySQLCluster.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: # The name of a ConfigMap in the same namespace mysqlConfigMapName: mycnf ...

Some configurations like

performance-schema-instrument need to be specified multiple times.

Such configurations are not possible with key-value format in ConfigMap.

To specify them, use _include special key.

The value of _include will be copied to my.cnf as opaque.

apiVersion: v1 kind: ConfigMap metadata: namespace: default name: mycnf data: _include: | performance-schema-instrument='memory/%=ON' performance-schema-instrument='wait/synch/%/innodb/%=ON' performance-schema-instrument='wait/lock/table/sql/handler=OFF' performance-schema-instrument='wait/lock/metadata/sql/mdl=OFF'

Auto configuration for innodb_buffer_pool_size

innodb_buffer_pool_size is critically important to the performance of MySQL.

MOCO configures it automatically when:

- ConfigMap does not include

innodb_buffer_pool_sizekey and - Either

resources.requests.memoryorresources.limits.memoryis given formysqldcontainer

For example, the MySQLCluster shown below requests 100 GiB of memory, so innodb_buffer_pool_size is set to 70 GiB.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: podTemplate: spec: containers: - name: mysqld image: quay.io/cybozu/mysql:8.0.25 resources: requests: memory: 100Gi

Custom Pod template

As shown in the previous sections, the Pod template of mysqld can be customized freely.

The following is another example to distribute Pods among different Nodes by setting podAntiAffinity.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: podTemplate: spec: affinity: podAntiAffinity: requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchExpressions: - key: app.kubernetes.io/name operator: In values: - mysql - key: app.kubernetes.io/instance operator: In values: - test # the same as `metadata.name` topologyKey: "kubernetes.io/hostname" ...

MOCO sets app.kubernetes.io/name and app.kubernetes.io/instance labels on Pods automatically.

Custom Service template

Services for the primary and replicas can also be customized with spec.serviceTemplate field.

The following example sets the service type to LoadBalancer to allow access to MySQL from outside of Kubernetes.

apiVersion: moco.cybozu.com/v1beta1 kind: MySQLCluster metadata: namespace: default name: test spec: serviceTemplate: spec: type: LoadBalancer ...

Sidecar for slow query log

Slow query log is a feature of MySQL to record queries that took long time. It is very helpful for MySQL admins to analyze performance problems.

Slow query log cannot be output from stdout or stderr of mysqld container.

Therefore MOCO adds a sidecar container to read the contents of slow query log and output them from stdout of the sidecar container.

You can see slow query logs as follows:

$ kubectl logs moco-test-0 -c slow-log /usr/local/mysql/bin/mysqld, Version: 8.0.25 (Source distribution). started with: Tcp port: 3306 Unix socket: /run/mysqld.sock Time Id Command Argument

Auto configuration of PodDisruptionBudget

PodDisruptionBudget is a Kubernetes feature to control Pod evictions for an application so as not to break it.

MOCO creates a PodDisruptionBudget to allow up to 1 instance for 3-instance MySQLCluster, and up to 2 instances for 5-instance MySQLCluster.

Conclusion

We will migrate all MySQL instances running in our production environment to Kubernetes. MOCO is born to be the key to the migration.

We believe MOCO is one of the most feature-rich MySQL operators available today.

Please check it out.