By Hirotaka Yamamoto (@ymmt2005)

We are pleased to announce that Coil v2, the second major version of our CNI plugin for Kubernetes, is now generally available.

Coil offers bare-metal network performance plus the following features:

- Multiple address pools to create Pods with special IP addresses

- Opt-in egress NAT service on Kubernetes

- Support for IPv4 single-stack, IPv6 single-stack, and dual-stack networks

- Various routing options

- Configuration through custom resources

Coil is designed to be easily integrated with other software such as BIRD, MetalLB, Calico, or Cilium to implement Kubernetes features like LoadBalancer or NetworkPolicy. We have been running Coil in our data centers and proudly recommend it to everyone who is seeking a way to implement rich networking features for on-premises Kubernetes.

This is the first of a series of Coil v2 articles. The other articles are:

- Implementing highly-available NAT service on Kubernetes

- Delegating CNI requests to a gRPC server for better tracing


Problems solved by Coil

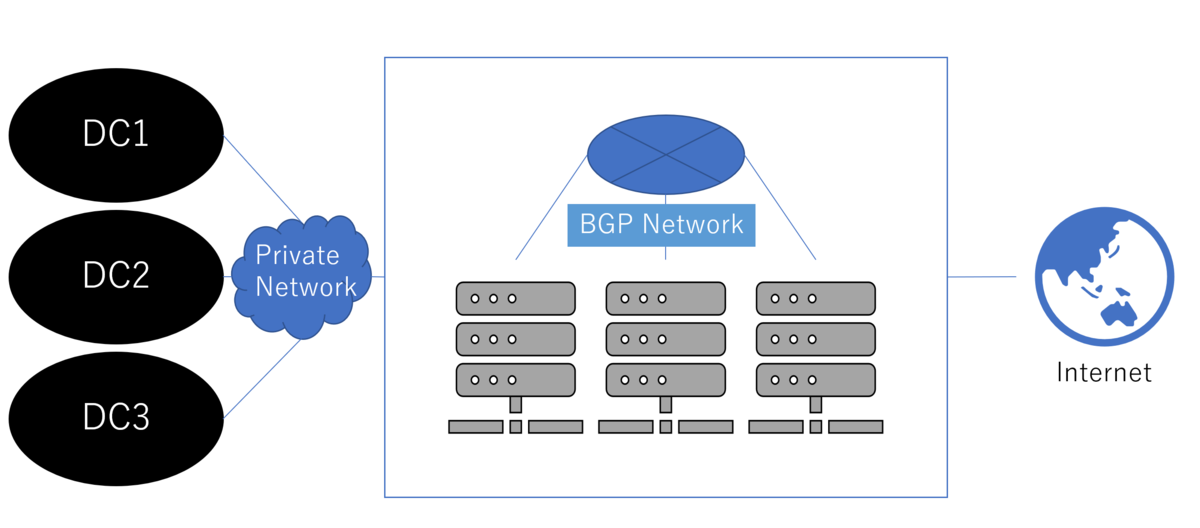

We are building cloud-native data centers using Kubernetes. As it is now common to build networking for such data centers with BGP and a leaf-spine topology, we run BIRD on all the node servers. Our requirements for the Kubernetes network are:

- Bare-metal performance

- Integration with the underlying BGP network

- Integration with MetalLB to implement LoadBalancer service

- Egress pods (or nodes) for the Internet and other external networks

We first looked at Calico, but it cannot easily be integrated with either the underlying BGP network or MetalLB. This restriction stems from the fact that Calico embeds BIRD to speak BGP itself, while only one BGP router on a node can peer with the uplink router.

Coil was developed to satisfy all these requirements. Coil does not use overlays to route packets, so its performance is almost the same as that of a bare-metal network. Coil can be easily integrated with existing routers and MetalLB. Coil also allows you to run Pods as gateway servers to external networks such as the Internet.

Features

Address pools

Typically, data centers are connected with other networks, including the Internet.

Not all IP addresses in such a data center can communicate with external networks. For instance, nodes and Pods having private IPv4 addresses cannot directly communicate with servers on the Internet.

AddressPool is a feature to define a set of IP addresses for a specific purpose.

The following manifest defines the default pool for normal pods and a special ipv4-global pool.

```yaml
apiVersion: coil.cybozu.com/v2
kind: AddressPool
metadata:
  name: default
spec:
  blockSizeBits: 5
  subnets:
    - ipv4: 10.64.0.0/16
---
apiVersion: coil.cybozu.com/v2
kind: AddressPool
metadata:
  name: ipv4-global
spec:
  blockSizeBits: 0
  subnets:
    - ipv4: 203.0.113.0/24
```
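The manifest does not explain blockSizeBits on its own. Judging from the /27 blocks in the kubectl output near the end of this article, a value of 5 appears to split the pool into blocks of 2^5 = 32 addresses, and 0 into single addresses. The Python sketch below reproduces that arithmetic under one assumption: block n starts at the subnet's base address plus n times 2^blockSizeBits. The function nth_block is hypothetical and not part of Coil.

```python
import ipaddress


def nth_block(subnet: str, block_size_bits: int, index: int):
    """Return the index-th address block carved out of a pool subnet.

    Hypothetical helper. Assumes block n covers 2**block_size_bits addresses
    starting at base + n * 2**block_size_bits; an illustration, not Coil's
    actual allocator.
    """
    net = ipaddress.ip_network(subnet)
    block_size = 2 ** block_size_bits
    if index < 0 or (index + 1) * block_size > net.num_addresses:
        raise ValueError("block index is outside the subnet")
    start = net.network_address + index * block_size
    prefix_len = net.max_prefixlen - block_size_bits
    return ipaddress.ip_network(f"{start}/{prefix_len}")


if __name__ == "__main__":
    print(nth_block("10.64.0.0/16", 5, 0))    # 10.64.0.0/27  (matches default-0)
    print(nth_block("10.64.0.0/16", 5, 10))   # 10.64.1.64/27 (matches default-10)
    print(nth_block("203.0.113.0/24", 0, 3))  # 203.0.113.3/32
```

Under this reading, the /16 default pool yields 65536 / 32 = 2048 blocks, while every block of ipv4-global is a single /32, presumably so that scarce global addresses are not reserved in bulk for one node.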

To specify which address pool to use for assigning IP addresses to new Pods, cluster admins have to annotate Namespace resources as follows:

```console
$ kubectl create ns internet-egress
$ kubectl annotate ns internet-egress coil.cybozu.com/pool=ipv4-global
```

Pods in the internet-egress namespace will be assigned IP addresses from the ipv4-global pool.

The default pool is used for namespaces without the coil.cybozu.com/pool annotation.

This design is for multi-tenancy security; only cluster admins can allow the use of special address pools.
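The selection rule above amounts to reading one Namespace annotation with a fallback. A minimal Python sketch, assuming the Namespace's annotations are available as a plain dict; pool_for_namespace is a hypothetical name, not a Coil function.

```python
from typing import Mapping, Optional

POOL_ANNOTATION = "coil.cybozu.com/pool"
DEFAULT_POOL = "default"


def pool_for_namespace(annotations: Optional[Mapping[str, str]]) -> str:
    """Return the AddressPool name used for new Pods in a namespace.

    Hypothetical helper: falls back to the default pool when the
    Namespace has no coil.cybozu.com/pool annotation.
    """
    if not annotations:
        return DEFAULT_POOL
    return annotations.get(POOL_ANNOTATION, DEFAULT_POOL)


if __name__ == "__main__":
    print(pool_for_namespace({"coil.cybozu.com/pool": "ipv4-global"}))  # ipv4-global
    print(pool_for_namespace({}))                                       # default
```

Only Namespace annotations are consulted here and Pod annotations play no part, which mirrors the point above: the choice of a special pool stays with cluster admins.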

Now, we can create an HTTP proxy gateway to the Internet by running Squid Pods in the namespace.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: internet-egress
  name: squid
spec:
  replicas: 2
  ...
---
apiVersion: v1
kind: Service
metadata:
  namespace: internet-egress
  name: http-proxy
spec:
  ...
```

Opt-in egress NAT service

As we have seen, AddressPool allows us to run gateway Pods, such as HTTP proxies, to external networks.

However, there are many application protocols other than HTTP/HTTPS.

To allow any Pod to initiate outgoing TCP/UDP communication with external networks, Coil provides a feature called opt-in egress NAT. NAT, or Network Address Translation, is a technology that allows transparent TCP/UDP communication with external networks by rewriting packet addresses.
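To make the idea of NAT concrete, here is a toy Python model of source NAT (SNAT) driven by a translation table: outgoing packets get their private source address and port replaced by a public address and a freshly allocated port, and replies are mapped back. This is a generic illustration with hypothetical names (SourceNAT, Packet), not Coil's implementation.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass(frozen=True)
class Packet:
    src: Endpoint
    dst: Endpoint


class SourceNAT:
    """Toy SNAT table that rewrites private sources to one public address."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound: Dict[Endpoint, int] = {}  # private endpoint -> public port
        self.inbound: Dict[int, Endpoint] = {}   # public port -> private endpoint

    def translate_out(self, pkt: Packet) -> Packet:
        """Rewrite the source of an outgoing packet, allocating a port on first use."""
        port = self.outbound.get(pkt.src)
        if port is None:
            port = self.next_port
            self.next_port += 1
            self.outbound[pkt.src] = port
            self.inbound[port] = pkt.src
        return Packet(src=(self.public_ip, port), dst=pkt.dst)

    def translate_in(self, pkt: Packet) -> Optional[Packet]:
        """Map a reply back to the original private endpoint; None means drop."""
        if pkt.dst[0] != self.public_ip:
            return None
        private = self.inbound.get(pkt.dst[1])
        if private is None:
            return None  # no mapping: unsolicited inbound packet
        return Packet(src=pkt.src, dst=private)
```

This toy reuses one public port per private endpoint regardless of destination; real NAT implementations also track protocol and connection state and expire idle entries.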

The first thing you need to do to enable this feature is to create Egress custom resources.

An Egress resource represents an egress NAT gateway to a set of external networks.

An example Egress for the Internet looks like:

```yaml
apiVersion: coil.cybozu.com/v2
kind: Egress
metadata:
  namespace: internet-egress
  name: nat
spec:
  replicas: 2
  destinations:
    - 0.0.0.0/0
    - ::/0
```

The destinations field is mandatory. When a client Pod sends a packet whose destination address falls within one of the destinations subnets, the packet is sent to the Egress service. The Egress service translates the packet's source address (SNAT) and sends it to the destination.
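Deciding whether a packet goes to the Egress is plain prefix matching against the destinations list. A minimal Python sketch using the standard ipaddress module, assuming destinations are given as CIDR strings; goes_through_egress is a hypothetical name, and Coil's actual packet path is covered in the follow-up NAT article.

```python
import ipaddress
from typing import Iterable


def goes_through_egress(dst: str, destinations: Iterable[str]) -> bool:
    """Return True if dst falls inside any subnet listed in destinations."""
    addr = ipaddress.ip_address(dst)
    for cidr in destinations:
        # `in` simply returns False when the IP versions differ.
        if addr in ipaddress.ip_network(cidr):
            return True
    return False


if __name__ == "__main__":
    internet = ["0.0.0.0/0", "::/0"]
    print(goes_through_egress("198.51.100.7", internet))        # True
    print(goes_through_egress("198.51.100.7", ["192.0.2.0/24"]))  # False
```

With 0.0.0.0/0 and ::/0, as in the example Egress, every IPv4 and IPv6 destination matches.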

Not all Pods become clients of an Egress. To become a client, a Pod needs an annotation like this:

```
egress.coil.cybozu.com/<namespace of Egress>: <name of Egress>
```

This is why the feature is called opt-in egress NAT. An example client Pod looks like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  namespace: default
  name: nat-client
  annotations:
    egress.coil.cybozu.com/internet-egress: nat
spec:
  ...
```
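Because the Egress namespace is embedded in the annotation key, finding the Egress resources a Pod has opted into is a matter of prefix matching on annotation keys. A minimal Python sketch; egress_targets is hypothetical, and Coil's own handling, including any validation, may differ.

```python
from typing import List, Mapping, Tuple

EGRESS_PREFIX = "egress.coil.cybozu.com/"


def egress_targets(pod_annotations: Mapping[str, str]) -> List[Tuple[str, str]]:
    """Return sorted (namespace, name) pairs of the Egresses a Pod opted into."""
    targets = []
    for key, value in pod_annotations.items():
        if key.startswith(EGRESS_PREFIX):
            # The part after the prefix is the namespace of the Egress.
            namespace = key[len(EGRESS_PREFIX):]
            targets.append((namespace, value))
    return sorted(targets)


if __name__ == "__main__":
    print(egress_targets({"egress.coil.cybozu.com/internet-egress": "nat"}))
    # [('internet-egress', 'nat')]
```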

This feature is described in detail in the follow-up article, Implementing highly-available NAT service on Kubernetes.

IPv4/v6 single & dual stacks

Coil supports IPv4/IPv6 dual-stack as well as single-stacks.

For a dual-stack Kubernetes cluster, create a dual-stack address pool like this:

```yaml
apiVersion: coil.cybozu.com/v2
kind: AddressPool
metadata:
  name: default
spec:
  subnets:
    - ipv4: 10.2.0.0/16
      ipv6: fd02::/112
```
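The two subnets in this example are the same size: a /16 IPv4 subnet and a /112 IPv6 subnet both hold 2^16 addresses, so every IPv4 address can be paired with the IPv6 address at the same offset. The Python sketch below shows such a pairing; paired_address is hypothetical, and the same-offset scheme is an assumption for illustration, not a statement of how Coil assigns dual-stack addresses.

```python
import ipaddress


def paired_address(ipv4_subnet: str, ipv6_subnet: str, offset: int) -> tuple:
    """Return the IPv4 and IPv6 addresses at the same offset in a subnet pair.

    Hypothetical helper; assumes both subnets contain the same number of
    addresses, as 10.2.0.0/16 and fd02::/112 do.
    """
    v4 = ipaddress.IPv4Network(ipv4_subnet)
    v6 = ipaddress.IPv6Network(ipv6_subnet)
    if v4.num_addresses != v6.num_addresses:
        raise ValueError("this sketch assumes both subnets have the same size")
    if not 0 <= offset < v4.num_addresses:
        raise ValueError("offset is outside the subnets")
    return v4.network_address + offset, v6.network_address + offset


if __name__ == "__main__":
    v4, v6 = paired_address("10.2.0.0/16", "fd02::/112", 0x105)
    print(v4, v6)  # 10.2.1.5 fd02::105
```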

There are no differences in supported features between stacks. However, it should be noted that Kubernetes dual-stack support is still an alpha feature as of Kubernetes 1.19.

Routing options

Coil does not speak any routing protocol by itself. Instead, it exports the routes that a node should advertise into an otherwise unused Linux kernel routing table. This design allows you to use any routing software and/or protocol.

For environments where all the nodes are connected in a layer-2 network, Coil provides an option to program inter-node routing by itself (without speaking a routing protocol). Thanks to this option, Coil can be run without routing software.

Below is an example BIRD 2.0 configuration that imports the routing information exported by Coil:

```
# export4 is a table to be used for routing protocols such as BGP
ipv4 table export4;

# Import Coil routes into export4
protocol kernel 'coil' {
    kernel table 119;  # the routing table into which coild exports routes
    learn;
    scan time 1;
    ipv4 {
        table export4;
        import all;
        export none;
    };
}

# Import routes from external routers into master4, the main table for IPv4 routes.
# Routes from "coil" are excluded.
protocol pipe {
    table master4;
    peer table export4;
    import filter {
        if proto = "coil" then reject;
        accept;
    };
    export none;
}

# Reflect routes in master4 into the Linux kernel
protocol kernel {
    ipv4 {
        export all;
    };
}
```

Comparison with other software

The table below compares the features of Coil with other well-known CNI plugins.

| Feature | Coil | Flannel | Calico | Cilium |
|---|---|---|---|---|
| Address pools | Yes | No | Yes | Poor (1) |
| Egress NAT | Yes | No | Poor (2) | No |
| IPv4/IPv6/Dual | Yes | v4 only | Yes | Yes |
| Routing protocol | Any | overlay | BGP/overlay | Any |
| NetworkPolicy | No (3) | No (3) | Yes | Yes |

- (1) Cilium has the CiliumNode custom resource for configuring IPAM on a per-node basis.

- (2) Calico has a feature called natOutgoing for configuring each Node to act as a NAT server. This is useful only when nodes can communicate with external networks.

- (3) Coil and Flannel can be combined with Calico or Cilium to add NetworkPolicy support.

Usage

Running on kind clusters

kind is a tool for running local Kubernetes clusters using Docker containers as nodes. Coil can replace the default network plugin of kind.

Quick start explains how to install Coil on kind clusters.

Production deployment

You can choose the optional features by editing kustomization.yaml and netconf.json.

Installation is as easy as running kustomize build v2 and applying the generated manifests.

Detailed deployment instructions are available.

Use kubectl to control Coil

Coil uses Kubernetes custom resources to configure address pools and egresses (AddressPool and Egress) and to store status information.

This means you can control Coil with kubectl.

For example, you can check the address block allocations as follows:

```console
$ kubectl get addressblocks.coil.cybozu.com | head
NAME         NODE          POOL      IPV4            IPV6
default-0    10.69.0.4     default   10.64.0.0/27
default-1    10.69.0.203   default   10.64.0.32/27
default-10   10.69.0.5     default   10.64.1.64/27
default-11   10.69.1.141   default   10.64.1.96/27
```

Try it!

Coil is available on GitHub for everyone. Please try it. We welcome your feedback!