By Akihiro Ikezoe, Hirotaka Yamamoto

Have you ever found that your Kubernetes cluster could not create new Pods because Docker Hub or another container registry was down?

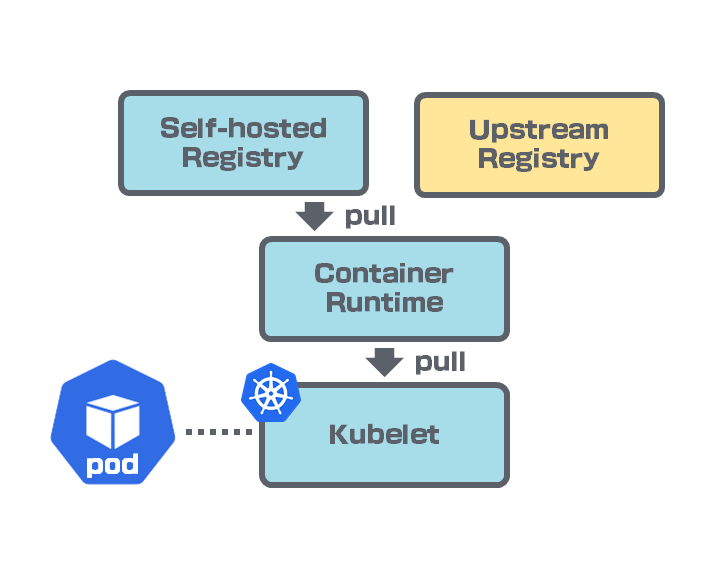

This article shows several ways to ensure that your Kubernetes clusters can always pull images, even while an upstream registry is failing. We also describe why we chose to self-host a container image registry as a pull-through cache for an upstream registry.

- Benefits of having a local registry

- Software for self-hosting registries

- How to switch registries transparently

- Limitations and caveats

- Our implementation

- Summary

Benefits of having a local registry

Many container images are hosted on public image registries including Docker Hub, quay.io, or GitHub Container Registry (GHCR).

If you have a Deployment that depends on these public registries, you might not be able to create new Pods while the registry is failing. This would cause services running on your Kubernetes cluster to be disrupted.

A local, self-hosted registry can resolve this problem. If you have the same image in the local registry as in the public registry, then when the public registry is down, you can fetch the image from the local registry instead.

A local registry has further benefits, including:

- No rate limits, unlike Docker Hub

- Faster fetch times

- Less bandwidth usage

Software for self-hosting registries

Registry

github.com/distribution/distribution includes a simple image registry called registry.

Harbor

Harbor is a feature-rich registry.

Dragonfly

Dragonfly is an open-source, P2P-based image and file distribution system.

How to switch registries transparently

A Pod in Kubernetes specifies container images with a registry as follows:

    spec:
      containers:
      - name: ubuntu
        image: quay.io/cybozu/ubuntu:20.04

In the above example, quay.io is the registry. If the registry is omitted, docker.io is normally used.
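The rule for picking the registry can be sketched in a few lines of Python. This is a simplified illustration of the convention followed by Docker-compatible tooling, not the actual code of any runtime: the first path component is treated as a registry host only if it contains a dot or a colon, or is `localhost`. The function name `split_registry` is made up for this example.

```python
def split_registry(image: str) -> tuple[str, str]:
    """Split an image reference into (registry, remainder).

    Simplified: the first path component counts as a registry host only
    if it looks like one (contains "." or ":", or is "localhost").
    """
    first, slash, rest = image.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    if not slash:
        # Docker Hub official images live under the "library" namespace.
        return "docker.io", "library/" + image
    return "docker.io", image


print(split_registry("quay.io/cybozu/ubuntu:20.04"))  # ('quay.io', 'cybozu/ubuntu:20.04')
print(split_registry("ubuntu:20.04"))                 # ('docker.io', 'library/ubuntu:20.04')
```

This matters for mirroring because mirrors are configured per registry host, so an image written without a registry is handled by whatever is configured for docker.io.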

Suppose that the registry is down and we need to switch the registry from quay.io to something like local.registry.svc.

You can, of course, edit each Deployment or StatefulSet to point to the local registry.

That said, this becomes cumbersome when you have many Deployments or StatefulSets.

There are three ways to avoid editing manifests manually:

- Registry mirror & pull-through cache registry

- Man-in-the-middle proxy

- Mutating admission webhook

Registry mirror & pull-through cache registry

Most container runtimes, including containerd and cri-o, can be configured to use registry mirrors.

- Docker - Configure the Docker daemon

- Containerd - Configure Registry Endpoint

- containers/image (used inside cri-o) - Remapping and mirroring registries

The containerd configuration below is an example that sets up local.registry.svc as a mirror of quay.io.

    [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]
        endpoint = ["http://local.registry.svc", "https://quay.io"]

With this configuration, containerd tries local.registry.svc first when it fetches an image from quay.io.

If local.registry.svc is down, containerd falls back to quay.io.
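The fallback behavior amounts to trying the endpoints in the order they are listed. The sketch below models that idea in Python; it is not containerd's code. `pull_with_fallback` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real registry request by pretending that the local mirror is unreachable.

```python
from typing import Callable, Sequence


def pull_with_fallback(
    endpoints: Sequence[str],
    image: str,
    fetch: Callable[[str, str], bytes],
) -> tuple[str, bytes]:
    """Try each endpoint in order; return (endpoint, data) from the first that works."""
    errors = []
    for endpoint in endpoints:
        try:
            return endpoint, fetch(endpoint, image)
        except ConnectionError as exc:
            errors.append(f"{endpoint}: {exc}")
    raise ConnectionError("all endpoints failed: " + "; ".join(errors))


def fake_fetch(endpoint: str, image: str) -> bytes:
    # Stand-in for a real registry request: pretend the local mirror is down.
    if endpoint == "http://local.registry.svc":
        raise ConnectionError("connection refused")
    return f"{image} from {endpoint}".encode()


used_endpoint, _ = pull_with_fallback(
    ["http://local.registry.svc", "https://quay.io"],
    "cybozu/ubuntu:20.04",
    fake_fetch,
)
print(used_endpoint)  # https://quay.io
```

The order of the `endpoint` list is therefore significant: listing the upstream last makes it the fallback rather than the default.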

Now, we have multiple registries available for an image. The remaining problem is how to pre-load the image into the local mirror registry.

Instead of manually pushing the necessary images one by one to the local registry, you can use the "pull-through cache" feature.

Pull-through cache is available in both distribution's registry and Harbor.

- Docker Registry - Registry as a pull through cache

- Harbor - Configuring Harbor as a local registry mirror

With this feature enabled, the local registry can transparently fetch the requested image from the upstream registry and cache it. For subsequent requests, the local registry will be able to return the cached image.
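As a toy model of this behavior, the Python sketch below caches whole images by reference and keeps serving them after the upstream becomes unavailable. It is only an illustration under simplifying assumptions: real registries cache manifests and blobs separately and have their own expiry rules, and the names `PullThroughCache`, `fetch_upstream`, and `state` are invented for this example.

```python
from typing import Callable


class PullThroughCache:
    """Toy model of a pull-through cache keyed by image reference."""

    def __init__(self, fetch_upstream: Callable[[str], bytes]) -> None:
        self._fetch_upstream = fetch_upstream
        self._store: dict[str, bytes] = {}

    def get(self, image: str) -> bytes:
        if image not in self._store:
            # Cache miss: fetch from the upstream registry and keep a copy.
            self._store[image] = self._fetch_upstream(image)
        return self._store[image]


state = {"upstream_up": True, "upstream_calls": 0}


def fetch_upstream(image: str) -> bytes:
    # Stand-in for a request to the upstream registry.
    if not state["upstream_up"]:
        raise ConnectionError("upstream registry is down")
    state["upstream_calls"] += 1
    return b"layers of " + image.encode()


cache = PullThroughCache(fetch_upstream)
first = cache.get("cybozu/ubuntu:20.04")   # miss: goes to the upstream
state["upstream_up"] = False               # simulate an upstream outage
second = cache.get("cybozu/ubuntu:20.04")  # hit: served from the local copy
print(first == second, state["upstream_calls"])  # True 1
```

Note that an image that was never requested before the outage is not in the cache, so the cache only protects images that have been pulled at least once.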

Man-in-the-middle proxy

This method uses a local registry as a man-in-the-middle proxy for the upstream registry.

The container runtime, such as containerd, fetches images from the upstream registry through an HTTPS proxy server.

The HTTPS proxy server intercepts the communication and caches the requested image.

To do so, a root CA certificate needs to be injected into the container runtime.

rpardini/docker-registry-proxy is one such implementation.

One apparent drawback of this approach is that the man-in-the-middle proxy could become another single point of failure.

If your Kubernetes clusters' container runtime is Docker, this method may be appropriate, since Docker cannot set up mirrors for registries other than Docker Hub.

Mutating admission webhook

This method uses a Mutating Admission Webhook to rewrite the container image registry dynamically when a Pod is created.

docker-proxy-webhook is one such implementation.

With this approach, how to fall back to the upstream registry when the local registry goes down remains an open problem.
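To make the idea concrete, here is a minimal Python sketch of the mutation step only. It builds JSON Patch operations that replace the registry part of each container image. This is an illustration, not how docker-proxy-webhook is implemented; `registry_patch` is a hypothetical helper, and the sketch deliberately ignores images that omit the registry.

```python
import json


def registry_patch(pod: dict, mapping: dict[str, str]) -> list[dict]:
    """Build JSON Patch operations that rewrite image registries in a Pod."""
    ops = []
    for field in ("initContainers", "containers"):
        for i, container in enumerate(pod.get("spec", {}).get(field, [])):
            registry, slash, rest = container["image"].partition("/")
            # Only rewrite images whose registry is explicitly listed in the mapping.
            if slash and registry in mapping:
                ops.append({
                    "op": "replace",
                    "path": f"/spec/{field}/{i}/image",
                    "value": f"{mapping[registry]}/{rest}",
                })
    return ops


pod = {
    "spec": {
        "containers": [
            {"name": "ubuntu", "image": "quay.io/cybozu/ubuntu:20.04"},
        ]
    }
}
patch = registry_patch(pod, {"quay.io": "local.registry.svc"})
print(json.dumps(patch, indent=2))
```

A real webhook would return such a patch, base64-encoded, inside an AdmissionReview response. Because the Pod spec is rewritten to name only the local registry, the container runtime has no second endpoint to try if that registry is down.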

Limitations and caveats

There are some limitations and caveats to keep in mind when implementing a pull-through cache registry as a mirror of an upstream registry.

Docker can have mirrors only for Docker Hub

Although extending this support has been discussed for years, Docker can have mirrors only for Docker Hub.

Note that this is a limitation of Docker, not of the registry or containerd.

In addition, it is recommended to use a container runtime other than Docker because dockershim has been deprecated since Kubernetes 1.20.

Harbor only supports proxy caching for Docker Hub and Harbor registries

According to the Harbor 2.1 documentation at https://goharbor.io/docs/2.1.0/administration/configure-proxy-cache/ , Harbor supports proxy caching only for Docker Hub and Harbor registries.

registry, on the other hand, can work with Docker Hub, quay.io, and GitHub Container Registry.

Only one upstream per pull-through cache registry

An instance of registry or Harbor can have only one upstream registry for pull-through cache.

If you have multiple upstream registries, you need one local registry for each of them.
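With one cache per upstream, the containerd mirror configuration shown earlier needs one section per upstream registry, each pointing at its own local cache. The Python sketch below renders such a configuration. The function `mirrors_toml` and the local hostnames `quay-cache.registry.svc` and `ghcr-cache.registry.svc` are assumptions made for this example, not names from our setup.

```python
def mirrors_toml(mirrors: dict[str, str]) -> str:
    """Render containerd CRI mirror sections, one per upstream registry.

    `mirrors` maps an upstream host to the URL of its dedicated local cache.
    """
    prefix = 'plugins."io.containerd.grpc.v1.cri".registry.mirrors'
    lines = [f"[{prefix}]"]
    for upstream, local_url in mirrors.items():
        lines.append(f'  [{prefix}."{upstream}"]')
        # The local cache comes first; the upstream itself is the fallback.
        lines.append(f'    endpoint = ["{local_url}", "https://{upstream}"]')
    return "\n".join(lines)


print(mirrors_toml({
    "quay.io": "http://quay-cache.registry.svc",   # hypothetical local cache
    "ghcr.io": "http://ghcr-cache.registry.svc",   # hypothetical local cache
}))
```

Generating the sections from a single mapping keeps the list of upstreams and the list of local caches in step as registries are added.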

imagePullSecrets are not passed to pull-through cache registries

To access private image repositories, Kubernetes Pods can specify imagePullSecrets that store credentials for the repository.

Container runtimes, including Docker, containerd, and cri-o, do not pass imagePullSecrets to local mirrors.

Instead, a local pull-through cache registry needs to be configured to have a credential to its upstream registry to fetch private images.

Note that the cached private images will be available to anyone who can access the local registry.

Note also that both registry and Harbor can have only one credential for the upstream registry.

For this reason, that single credential must be allowed to access every private image you use on the upstream registry.

Our implementation

Our Kubernetes clusters use containerd as the container runtime and use images on quay.io and GitHub Container Registry.

Given these conditions and the aforementioned limitations, we chose to deploy registry as a pull-through cache and configure containerd to use registry as a mirror.

If you are interested, you can check out our manifests and configurations:

Summary

We discussed the pros and cons of three methods for tolerating failures in container image registries:

- Configure the container runtime to use a pull-through cache registry as a mirror of the upstream registry

- Deploy a man-in-the-middle proxy and use it as a pull-through cache

- Use a mutating admission webhook to dynamically edit Pods to reference local mirrors

Which method is best depends on your environment. We hope this article helps you find the one that suits you.